A system for customized and interactive rehabilitation of disabled people

Researchers at the Institute for Computing Research of the University of Alicante have developed a multisensor system for the rehabilitation of, and interaction with, people with motor and cognitive disabilities. The system makes it possible to perform different therapies using multiple modes of interaction (pose, body and hand gestures, voice, touch and gaze position) depending on the type and degree of disability. Through a training process, the system can be customized so that each patient's own gestures can be defined for each sensor. The system is integrated with a range of rehabilitation applications, designed in collaboration with experts from the Hospital of Seville. Examples include solving puzzles and mazes, and writing text with predictive text. The system also provides a flexible and modular framework for developing new applications focused on new therapies. We are looking for entities and companies interested in commercial agreements, technical assistance or technical cooperation.

A system for customized and interactive rehabilitation of disabled people. In this video we present the proposed system and show how users can interact with it, for example by using hand gestures. With customized hand gestures, users can carry out different activities such as playing games, writing text or even manipulating objects in a 3D viewer. The proposed system supports multiple modes of interaction: eye gaze, hand and body gestures, voice commands, touch, etc.

Index

Motivation

System overview

Main advantages and innovative aspects of our technology

Videos

Supplementary material:

Cognitive activities

User study: Survey (template)

Press coverage

Motivation

According to the World Health Organization (WHO), more than one billion people in the world live with some kind of disability, and about 200 million of them experience considerable difficulties. The number of people with disabilities is expected to increase in the coming years, which is a cause of major concern. This increase is mainly due to population ageing, the higher risk of disability among older people, and the global rise in chronic diseases such as diabetes, cardiovascular disease, cancer and mental health disorders.

Devices and assistive technologies, among other measures, improve the mobility, hearing, vision and communication of people with disabilities. With these technologies, they can improve their skills, live autonomously and participate in society.

Nowadays, there is a wide variety of devices for the rehabilitation of people with disabilities using new technologies. For example, glove-based devices provide an accurate representation of hand movements or gestures. However, these systems are invasive: they restrict the patient's free movement and prevent natural interaction. Other, less invasive systems use 3D sensors to obtain the patient's pose and are used for injury rehabilitation. However, these systems are limited to high-level interaction gestures, which restricts their use in rehabilitation therapies for patients with minor disabilities or very limited mobility. There are also systems capable of tracking eye position, although their use in rehabilitation therapy is still very limited because they require the patient to stay within a narrow range of distance and position relative to the sensor. Finally, some systems combine multiple sensors, but they still fail to solve several of the problems that the presented system addresses.

System overview

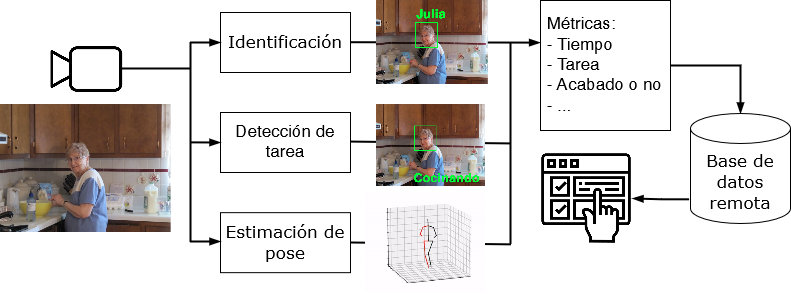

Researchers at the Institute for Computing Research of the University of Alicante have developed a new system that provides natural interaction for people with disabilities and supports motor and cognitive rehabilitation therapies. The system takes a new approach that makes efficient use of new technologies and human-machine interaction sensors, combining several sensors. A long-range sensor (3D camera) enables user identification and interaction with the system through gestures or the patient's own pose. This sensor also provides depth information about the scene, which makes it possible to locate the patient's working environment precisely. The data from the long-range sensor can be combined with a short-range 3D sensor that provides a precise virtual representation of the user's hands. This information is combined with data from the other sensors to perform rehabilitation therapies that require precise hand movements, poses or specific finger gestures. The short-range sensor is mounted on a swivel arm so that it can be brought into position when needed. The system also incorporates a gaze-tracking sensor that obtains the patient's gaze position (eye tracking). This sensor is calibrated for each patient to relate the position of the patient's eyes to the user interfaces displayed on the tablet screen.
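The per-patient gaze calibration can be illustrated with a minimal sketch. It assumes that the eye tracker reports raw gaze coordinates and that, during calibration, the patient fixates a few known targets on the tablet screen; an affine map from raw gaze to screen pixels is then fitted by least squares. The affine model, the target positions and the names `fit_affine` and `_solve3` are our assumptions for illustration; the source does not describe the calibration algorithm.

```python
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]


def _solve3(m: List[List[float]], v: List[float]) -> List[float]:
    """Solve the 3x3 system m @ x = v by Gaussian elimination with partial pivoting."""
    a = [row[:] + [rhs] for row, rhs in zip(m, v)]  # augmented matrix (copied rows)
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("degenerate calibration points (e.g. all collinear)")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, 3):
            f = a[r][col] / a[col][col]
            for c in range(col, 4):
                a[r][c] -= f * a[col][c]
    x = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):  # back substitution
        x[r] = (a[r][3] - sum(a[r][c] * x[c] for c in range(r + 1, 3))) / a[r][r]
    return x


def fit_affine(raw: Sequence[Point], screen: Sequence[Point]) -> Callable[[Point], Point]:
    """Assumed model: screen = W @ [gx, gy, 1], fitted by least squares."""
    if len(raw) != len(screen) or len(raw) < 3:
        raise ValueError("need at least 3 matching calibration points")
    rows = [(gx, gy, 1.0) for gx, gy in raw]
    # Normal equations (X^T X) w = X^T y, solved once per screen axis.
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    wx = _solve3(xtx, [sum(r[i] * s[0] for r, s in zip(rows, screen)) for i in range(3)])
    wy = _solve3(xtx, [sum(r[i] * s[1] for r, s in zip(rows, screen)) for i in range(3)])

    def to_screen(p: Point) -> Point:
        gx, gy = p
        return (wx[0] * gx + wx[1] * gy + wx[2], wy[0] * gx + wy[1] * gy + wy[2])

    return to_screen


# Hypothetical calibration: raw tracker readings for four on-screen targets.
raw_points = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
targets = [(100.0, 50.0), (900.0, 50.0), (100.0, 650.0), (900.0, 650.0)]
gaze_to_screen = fit_affine(raw_points, targets)
print(gaze_to_screen((0.5, 0.5)))
```

An affine model needs at least three non-collinear targets; using more targets, such as the four screen corners above, lets the least-squares fit average out measurement noise.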

These sensors are complemented by a microphone array that captures the user's voice commands and sound information from the environment. Finally, all sensors are connected to a tablet that processes the sensor data and offers an additional mode of interaction: touch. Touch interaction also enables rehabilitation therapies or communication based on the patient touching the interfaces displayed on the tablet screen.

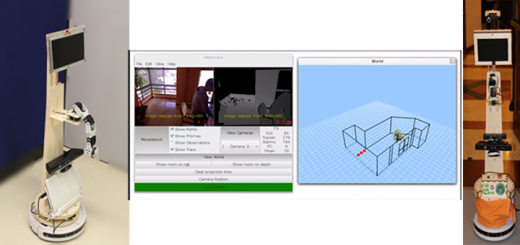

Mobile platform on which the sensors are mounted. The system has been deployed in a hospital to test it with people with disabilities. This is the first prototype; the same set of sensors can be mounted on any robotic platform. For example, we are currently planning to integrate the system with the Pepper robotic platform from Aldebaran Robotics.

All components are mounted on a mobile platform (prototype), forming a complete system for natural interaction. The system is intended for therapies with patients who have different disabilities.

In terms of operation, the system provides a framework for developing applications that enable rehabilitation or user communication through various human-machine interfaces. First, the system identifies the user in the environment and adjusts its settings to the patient's requirements and characteristics according to their disability. This task can be carried out with the help of a therapist or caregiver, who registers the user and trains the system for that patient. The system receives gestural data as input and translates it into basic commands (up, down, left, right, etc.). In addition, each patient's own gestures can be customized for each sensor. Thus, different sets of poses, body, arm, head and hand gestures, eye movements, precise hand (finger) movements or voice commands can be defined to carry out the same actions in the system. Through these actions, the user can navigate the environment, its options and applications to perform rehabilitation or communication tasks within a specific application.
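The translation from patient-specific gestures to a shared set of basic commands can be sketched as a lookup table keyed by patient and sensor. This is a minimal sketch assuming that each sensor's recognizer already emits a discrete gesture label; `GestureMap`, `bind`, `translate`, the sensor names and the gesture labels are hypothetical and are not taken from the actual system.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, Optional, Tuple


class Command(Enum):
    """Basic commands named in the text; the real set may be larger ("etc.")."""
    UP = "up"
    DOWN = "down"
    LEFT = "left"
    RIGHT = "right"


@dataclass
class GestureMap:
    """Hypothetical registry: (patient, sensor) -> {gesture label -> command}."""
    bindings: Dict[Tuple[str, str], Dict[str, Command]] = field(default_factory=dict)

    def bind(self, patient: str, sensor: str, gesture: str, command: Command) -> None:
        # Called while the therapist trains the system for a given patient.
        self.bindings.setdefault((patient, sensor), {})[gesture] = command

    def translate(self, patient: str, sensor: str, gesture: str) -> Optional[Command]:
        # Unknown gestures, or sensors not configured for this patient, map to None.
        return self.bindings.get((patient, sensor), {}).get(gesture)


gestures = GestureMap()
# Two patients trigger the same UP command through different sensors.
gestures.bind("patient-A", "hand", "open_palm_raise", Command.UP)
gestures.bind("patient-A", "voice", "arriba", Command.UP)
gestures.bind("patient-B", "gaze", "look_top_edge", Command.UP)
gestures.bind("patient-B", "head", "tilt_left", Command.LEFT)
print(gestures.translate("patient-B", "gaze", "look_top_edge"))
```

Keeping the command set fixed while the bindings vary per patient lets every application consume the same basic commands, regardless of how each patient produces them.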

Each patient can be recognized by the system through biometric identification (facial recognition), and a set of sensors and a set of custom gestures can be defined for each patient depending on the type of disability. Through this customization and proper sensor calibration, the system adapts to each user and offers the most natural interface possible.
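Once a patient has been identified, only the sensors enabled for that patient need to feed the command layer. The sketch below assumes that the facial-recognition step returns a patient identifier string; `PatientProfile`, `ProfileStore`, `filter_events` and the sensor names are hypothetical illustrations, not the system's actual data model.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Tuple

# (sensor, gesture label) pairs as produced by the individual recognizers.
SensorEvent = Tuple[str, str]


@dataclass(frozen=True)
class PatientProfile:
    patient_id: str
    enabled_sensors: FrozenSet[str]


class ProfileStore:
    """Hypothetical store of profiles created when a therapist registers a patient."""

    def __init__(self) -> None:
        self._profiles: Dict[str, PatientProfile] = {}

    def register(self, profile: PatientProfile) -> None:
        self._profiles[profile.patient_id] = profile

    def on_identified(self, patient_id: str) -> PatientProfile:
        # Assumption: patient_id comes from the facial-recognition step.
        # An unregistered identifier raises KeyError so a therapist can enrol the user.
        return self._profiles[patient_id]


def filter_events(profile: PatientProfile, events: List[SensorEvent]) -> List[SensorEvent]:
    """Drop events from sensors that are not enabled for this patient."""
    return [(s, g) for s, g in events if s in profile.enabled_sensors]


store = ProfileStore()
store.register(PatientProfile("patient-B", frozenset({"gaze", "voice"})))
active = store.on_identified("patient-B")
incoming = [("hand", "fist"), ("gaze", "look_top_edge"), ("voice", "arriba")]
print(filter_events(active, incoming))
```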

The system includes a set of applications for both interaction and patient rehabilitation. In particular, several applications that take the basic commands as input have been developed: a predictive text application (with text-to-speech conversion), a motor rehabilitation application (through virtual object manipulation) and a cognitive rehabilitation application (through guided drawing). This initial set of applications is extensible and can be adapted to the different needs of patients. In this way, the system provides a flexible and modular workspace for developing new therapies.
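A predictive text application reduces the number of selections a patient must make by offering word completions as letters are entered. The source does not describe its prediction model; as an assumed stand-in, the sketch below ranks corpus words by frequency for a given prefix. `PredictiveText` and the example corpus are hypothetical, and the text-to-speech step is omitted.

```python
from collections import Counter
from typing import Iterable, List


class PredictiveText:
    """Suggest completions for a typed prefix, most frequent words first."""

    def __init__(self, corpus: Iterable[str]) -> None:
        self.freq = Counter(word.lower() for word in corpus)

    def suggest(self, prefix: str, k: int = 3) -> List[str]:
        p = prefix.lower()
        candidates = [w for w in self.freq if w.startswith(p)]
        # Higher frequency first; alphabetical order breaks ties deterministically.
        candidates.sort(key=lambda w: (-self.freq[w], w))
        return candidates[:k]


predictor = PredictiveText("water water help help help hello home".split())
print(predictor.suggest("h"))
```

With basic commands as the only input, the patient could move through the suggestion list with up/down and confirm a word, instead of spelling it letter by letter.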

The first prototype of this technology has been deployed in a hospital in Seville, Spain. Doctors are currently evaluating and testing the system to see how patients can use it for rehabilitation. There are also plans to deliver some of these platforms to patients' homes, so they can continue the rehabilitation process at home with the help of family members.

Main advantages and innovative aspects of our technology

- Enables interactive, customized rehabilitation therapies for people with different motor and cognitive disabilities.

- Supports multiple modes of interaction (gestures, poses/hand movements, voice, eye gaze and touch) for people with different disabilities, offering significant advantages over other systems.

- Allows each patient's own gestures to be customized for each sensor, providing a natural and personalized interaction experience.

- Provides biometric identification (facial recognition), adapting the interaction (profiles) to the user's level of disability.

- Combines sensor data with 3D interfaces, providing a more realistic form of rehabilitation through advanced virtual reality techniques.

- Provides a flexible and modular workspace for developing new applications oriented to new therapies based on patients' different needs.

- Uses commercially available sensors and devices, so it can be modified, adapted and replicated easily at a reasonable cost depending on the type of patient, disability and therapy to be implemented.

- Provides a mobile platform that can be used by patients at home.

Videos

Below are some videos of the system:

The left video shows how the system is calibrated and trained to use the body gesture sensor. After this process, the right video shows how the user can control the application and solve puzzles using only simple gestures. Sensors can be predefined for each user depending on his/her disabilities.

The left video shows how the system is calibrated and trained to use the eye-tracking sensor. After calibration, the right video shows how the user can navigate through the application with eye gaze, and how the system lets the user solve puzzles and play other games using eye gaze alone.

The video below shows how the system can be customized to learn gestures and different ways of interaction according to users' disabilities. For example, we trained the system to recognize Schaeffer language, a special gesture set developed by Schaeffer et al. for communicating with people with cognitive disabilities.

The system was trained with data from multiple users. The video shows how we can customize the way users interact with our system and how new gestures can be defined for people with different disabilities.
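Learning new gestures from several users can be illustrated with a simple template-based classifier. The source does not state which learning method the system uses; the sketch below swaps in a nearest-centroid classifier over fixed-length feature vectors (for example, hand-joint positions from the short-range sensor), with a rejection distance so that unknown movements are ignored. The class name, the gesture labels, the feature vectors and the threshold are all hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence


class NearestCentroidGestures:
    """Average the examples recorded for each gesture; classify by the closest average."""

    def __init__(self, reject_distance: float) -> None:
        self.reject_distance = reject_distance
        self._sums: Dict[str, List[float]] = {}
        self._counts: Dict[str, int] = {}

    def add_example(self, label: str, features: Sequence[float]) -> None:
        acc = self._sums.setdefault(label, [0.0] * len(features))
        if len(acc) != len(features):
            raise ValueError("all examples of a gesture must have the same length")
        for i, value in enumerate(features):
            acc[i] += value
        self._counts[label] = self._counts.get(label, 0) + 1

    def classify(self, features: Sequence[float]) -> Optional[str]:
        best_label: Optional[str] = None
        best_dist = math.inf
        for label, acc in self._sums.items():
            centroid = [s / self._counts[label] for s in acc]
            dist = math.dist(centroid, features)  # Euclidean distance (Python 3.8+)
            if dist < best_dist:
                best_label, best_dist = label, dist
        # Reject movements that are far from every trained gesture.
        return best_label if best_dist <= self.reject_distance else None


model = NearestCentroidGestures(reject_distance=1.0)
# Hypothetical examples of two gestures recorded from two different users.
model.add_example("wave", [1.0, 0.0])
model.add_example("wave", [1.2, 0.1])
model.add_example("point", [0.0, 1.0])
model.add_example("point", [0.1, 0.9])
print(model.classify([1.1, 0.05]), model.classify([5.0, 5.0]))
```

Averaging examples from several users gives each gesture a template that is less tied to one person's way of performing it, while the rejection threshold keeps unrelated movements from triggering commands.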

Earlier version of the system

Earlier version of the system. It shows how the system tracks and identifies the user and then interacts with him/her through different sensors (hand gestures, eye gaze, etc.).

Supplementary material:

Below we provide supplementary material with a detailed description of the cognitive activities that we designed and implemented on this platform. We also provide the survey we used to conduct a user study.

Cognitive activities:

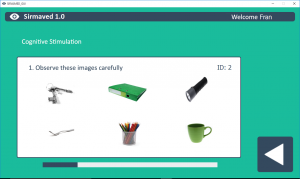

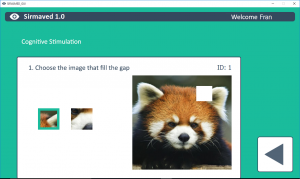

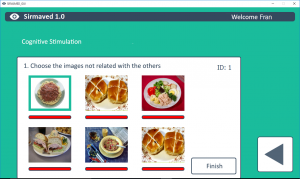

Left: Choose Among Six activity. Right: Fill the Gap activity.

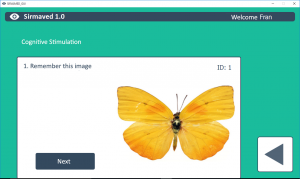

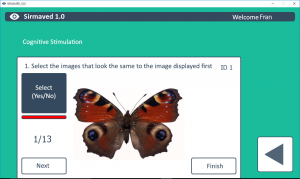

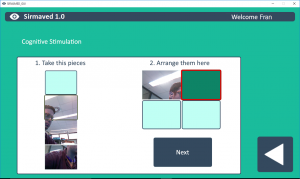

Left: Memory Carousel: first step. Right: Memory Carousel: second step.

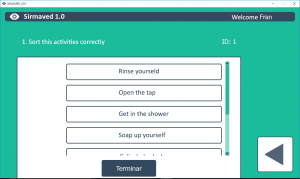

Left: Temporal Sequence activity. Right: Multiple Choice activity.

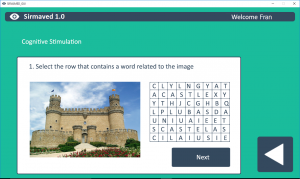

Left: Puzzle activity. Right: Word Search activity.

User study: survey (template)

Cognitive activities: description and main cognitive areas that are rehabilitated through these activities.


Press coverage:

This technology is protected by patent:

- Application number: 201531430

- Application date: 05/10/2015
