SIRMAVED: Development of an integrated robotic monitoring and interaction system for people with acquired brain injury and dependent people

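This project has been funded by the Spanish Ministry of Economy and Competitiveness (Ministerio de Economía y Competitividad) under the State Programme for R&D&I Oriented to the Challenges of Society (Programa Estatal de I+D+i Orientada a los Retos de la Sociedad), and co-financed with European FEDER funds. The project reference is DPI2013-40534-R and its full title is: SIRMAVED: DESARROLLO DE UN SISTEMA INTEGRAL ROBOTICO DE MONITORIZACION E INTERACCION PARA PERSONAS CON DAÑO CEREBRAL ADQUIRIDO Y DEPENDIENTES (Development of an integrated robotic monitoring and interaction system for people with acquired brain injury and dependent people).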

Caring for dependent people, whether their dependency is due to illness, accident, disability or ageing, is currently one of the priority research lines in developed countries. Beyond providing help and companionship, this care is increasingly expected to be therapeutic as well. Moreover, the aim is to provide it in the person's own home in order to minimize the cost of therapy.

To address this challenge, the main scientific goal of this project is to promote the health and well-being of society through the design, development and evaluation of a novel cognitive rehabilitation therapy for people with acquired brain injury and for dependent people. The therapy will be based on the design and use of an intelligent environment for active monitoring and of an autonomous social robot for interactive stimulation at home. This requires integrating several existing technologies and solving a range of technological challenges that such systems entail. To this end, the project relies on a multidisciplinary team able to tackle each challenge separately and thus deliver an integrated, global solution.

In addition, an experimental evaluation with real patients is planned. It will be carried out by clinical professionals, who will assess how effectively the system improves the quality of life of dependent people by evaluating both the patient's autonomy and their positive cognitive-affective state.

To achieve this general objective, several scientific and technological challenges must be addressed, which break down into the following specific objectives: i) develop an intelligent environment-monitoring system that accurately localizes and tracks the mobile agents present in a scene; ii) develop a multimodal human-robot interaction system whose interaction is as human-like as possible; iii) develop an on-board system for recognizing and manipulating small 3D objects so that the robot can assist the patient; iv) develop robust topological/metric self-localization techniques that give the robot freedom of movement and autonomy; v) develop a cognitive system that allows the robot to behave intelligently; vi) design the care and rehabilitation scenario, identify metrics, and carry out the pilot and final evaluation of the developed system in real scenarios with real patients; and finally, vii) disseminate the results to the scientific community and to companies and associations in the sector.

The project is expected to produce several kinds of results. At the scientific and technical level, we expect significant advances in the technologies to be developed. In terms of social impact, the aim is to improve the quality of life of patients with acquired brain injury and of dependent people. In terms of economic impact, we expect to obtain a low-cost system that could eventually be commercialized. This would involve setting up a technology-based company, increasing technology transfer to society and allowing new researchers to continue their careers, thereby fostering employment in the ICT sector.

Objectives

To achieve the proposed general objective, several scientific and technological challenges must be addressed, which break down into the following specific objectives:

1. Design an intelligent environment-monitoring system, based on low-cost visual sensors, that accurately localizes and tracks the mobile agents (individuals, robots and objects) present in a scene. The system must provide response mechanisms (triggering alarms, notifying emergency services, requesting human assistance, etc.) in situations that put people at risk (patient falls, lapses that affect the person's autonomy, etc.). All information gathered by this system will be sent to the robot (using previously established communication protocols) to improve the robot's perceptual and deliberative capabilities. Conversely, the robot will send its own perceptual information to this system for the same purpose.

2. Develop a multimodal human-robot interaction system (using spoken language and gestures). This system must provide interaction functionality both for perceiving the person (emotion detection, speech recognition and understanding, etc.) and for generating verbal and non-verbal gestures that humans perceive as social.

3. Develop an on-board system for recognizing and manipulating small 3D objects. The system must be robust to occlusions and lighting changes.

4. Develop robust topological/metric self-localization techniques for the robot within a known environment. On the one hand, classic topological localization techniques will be extended with RGB-D sensors, which makes it possible to handle the typical problems caused by lighting changes, even in complete darkness. On the other hand, techniques will be developed to maintain long-term geometric maps of the environment, applicable to the SLAM problem in mobile robots. Specifically, techniques will be developed for both RGB-D SLAM and visual SLAM.

5. Develop a cognitive system that enables intelligent behaviour in a manipulator robot by integrating the techniques developed in the previous objectives. The system will give the robot autonomous navigation, natural interaction and manipulation capabilities, as well as the ability to make both reactive and deliberative decisions, so that it can operate in the proposed environments. This integration will allow the robot to act as a companion robot at home, helping the dependent person with their daily rehabilitation tasks. This objective includes testing and deploying these capabilities on different robotic platforms.

6. Design the care and rehabilitation scenario, identify metrics, and carry out the pilot and final evaluation of the developed system in real scenarios with real patients. New stimulation mechanisms will be investigated for the scenarios targeted by this project. Metrics will be identified to assess the key aspects of patient rehabilitation in these scenarios, and the evaluation of the robotic platform and of the results of its use after the pilot with real patients will be designed.

7. Coordinate the proposed objectives and disseminate the project results.

Progress towards the objectives

For each objective, we report the results obtained and the degree of achievement.

Activity 1: Design an intelligent environment-monitoring system

This activity has been fully completed. The system is portable and can be installed in any home. It uses low-cost 3D sensors that provide both appearance information (conventional RGB images) and depth data (3D), which allows it to work without any lighting. The system has been deployed in several nursing homes. No publications about the system have been produced because a patent is pending. A video of the system in operation is available at https://www.youtube.com/watch?v=HEtZRH8CwKI. Within the project, we deployed this system in a home belonging to ADACEA and in the APSA residence in Alicante. [1] describes an alternative supervision approach using conventional cameras. [2] explores the possibilities of behaviour recognition using surveillance cameras.
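
The report does not describe how the monitoring system processes its sensor data (it is pending a patent), so what follows is only generic background on why depth sensing keeps working in the dark. A depth camera measures distance directly, and each depth pixel can be back-projected to a 3D point with the standard pinhole camera model without relying on scene illumination. A minimal sketch, assuming depth in metres and calibration intrinsics `fx`, `fy`, `cx`, `cy`; the function name and parameters are illustrative, not taken from the project.

```python
from typing import List, Tuple

Point3 = Tuple[float, float, float]


def depth_to_points(depth: List[List[float]], fx: float, fy: float,
                    cx: float, cy: float) -> List[Point3]:
    """Back-project a depth image (metres, row-major) into camera-frame 3D points.

    Illustrative pinhole-model sketch, not the project's (patent-pending) pipeline.
    Pixels with depth <= 0 are treated as invalid readings and skipped.
    """
    points: List[Point3] = []
    for v, row in enumerate(depth):        # v: pixel row index
        for u, z in enumerate(row):        # u: pixel column index, z: depth
            if z <= 0:
                continue
            x = (u - cx) * z / fx          # horizontal offset scaled by depth
            y = (v - cy) * z / fy          # vertical offset scaled by depth
            points.append((x, y, z))
    return points
```

No colour or brightness value enters the computation, which is why the geometry stays available at night.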

Progress and achievement of the objective: This objective has been 100% achieved, in both milestones and deliverables, all within the established deadlines.

Activity 2: Develop a multimodal human-robot interaction system

This is the largest activity in the project. On the one hand, we have completed the speech recognition system and the natural language understanding and synthesis system, which were tested in the RoCKIn competition.

On the other hand, we have built a recognition system for the gestures of Schaeffer's sign language, which is used by people with cognitive disabilities [3], [4]. See the video at https://www.youtube.com/watch?v=71XN0S43BXQ&feature=youtu.be
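
The Gomez-Donoso et al. (2016) abstract in the reference list states that Schaeffer's gestures are recognized from RGB-D body motion using Dynamic Time Warping (DTW) combined with k-Nearest Neighbors. A minimal sketch of that combination, assuming each gesture is a sequence of per-frame feature vectors (for example flattened joint coordinates), a Euclidean frame cost and `k=3`; these choices and the function names are assumptions, not the paper's settings.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Frame = Sequence[float]   # assumed: one feature vector (e.g. joint coordinates) per frame


def dtw(a: List[Frame], b: List[Frame]) -> float:
    """Dynamic Time Warping distance between two sequences, Euclidean frame cost."""
    n, m = len(a), len(b)
    inf = float("inf")
    d = [[inf] * (m + 1) for _ in range(n + 1)]
    d[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = math.dist(a[i - 1], b[j - 1])
            # extend the cheapest of: skip in a, skip in b, or advance both
            d[i][j] = cost + min(d[i - 1][j], d[i][j - 1], d[i - 1][j - 1])
    return d[n][m]


def knn_classify(query: List[Frame],
                 templates: List[Tuple[str, List[Frame]]], k: int = 3) -> str:
    """Label a gesture by majority vote among its k nearest recorded templates under DTW."""
    ranked = sorted(templates, key=lambda t: dtw(query, t[1]))
    votes = Counter(label for label, _ in ranked[:k])
    # on a tied vote, the label seen first (i.e. of the nearer template) wins
    return votes.most_common(1)[0][0]
```

DTW absorbs differences in execution speed between repetitions of the same gesture, which matters when users perform gestures at very different paces.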

A patent related to the system developed under this objective has been filed and granted. Details are available at http://www.rovit.ua.es/patente/

Progress and achievement of the objective: This objective has been 100% achieved, within the established deadlines.

Activity 3: Develop a 3D object recognition and manipulation system

When we started working with 3D data in the project, we had to develop methods to compress these data. [5] develops one of the first such compression methods to appear in the literature.
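
The Morell et al. (2014) entry in the reference list, which appears to correspond to [5], compresses a scene by extracting its planes and storing each plane's points as a Delaunay triangulation. The report does not say how the planes are found; RANSAC plane fitting is a common technique for that step, so the sketch below uses it as an assumed stand-in. The threshold, iteration count and function names are illustrative.

```python
import random
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]
Plane = Tuple[float, float, float, float]   # (a, b, c, d): a*x + b*y + c*z + d = 0, unit normal


def plane_from_points(p: Point, q: Point, r: Point) -> Optional[Plane]:
    """Plane through three points, or None if they are (nearly) collinear."""
    u = [q[i] - p[i] for i in range(3)]
    v = [r[i] - p[i] for i in range(3)]
    n = [u[1] * v[2] - u[2] * v[1],          # normal = u x v
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0]]
    norm = sum(c * c for c in n) ** 0.5
    if norm < 1e-12:
        return None
    a, b, c = (x / norm for x in n)
    return a, b, c, -(a * p[0] + b * p[1] + c * p[2])


def ransac_plane(points: List[Point], threshold: float = 0.01, iterations: int = 200,
                 seed: int = 0) -> Tuple[Optional[Plane], List[int]]:
    """RANSAC (assumed technique): keep the sampled plane with the most inliers."""
    rng = random.Random(seed)
    best_plane: Optional[Plane] = None
    best_inliers: List[int] = []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        a, b, c, d = plane
        # inliers: points whose distance to the plane is within the threshold
        inliers = [i for i, (x, y, z) in enumerate(points)
                   if abs(a * x + b * y + c * z + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers
```

Repeating the search on the remaining points extracts further planes; the inliers of each plane are what a plane-based compressor would then triangulate.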

Regarding object recognition, [6] presents a way to improve object reconstruction from 3D data. [7, 8] present two studies on noise tolerance in 3D object recognition. [9] studies object categorization using local 3D features. Both thesis [10] and thesis [11] focused on the methods developed in this activity. Video: http://www.rovit.ua.es/tfgAlbert_video.mp4. [12] begins working with sequences of 3D data.
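
The Martinez-Gomez et al. (2015) entry in the reference list combines RGB-D local features with a Bag of Words (BoW) model for object categorization. The core BoW step can be sketched as follows, assuming the codebook of "visual words" has already been learned (typically with k-means) and that descriptors are plain float vectors; the function name is illustrative.

```python
import math
from typing import List, Sequence


def bow_histogram(descriptors: List[Sequence[float]],
                  codebook: List[Sequence[float]]) -> List[float]:
    """Bag of Words encoding (illustrative sketch).

    Each local descriptor is assigned to its nearest codeword; the result is
    an L1-normalized histogram of codeword counts (all zeros if no descriptors).
    """
    hist = [0.0] * len(codebook)
    for desc in descriptors:
        word = min(range(len(codebook)), key=lambda k: math.dist(desc, codebook[k]))
        hist[word] += 1.0
    total = sum(hist)
    return [h / total for h in hist] if total else hist
```

Each object then becomes one fixed-length vector regardless of how many local features were detected, which is what lets a standard classifier categorize it.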

We have recently started applying deep learning to the recognition of 3D data [13] and have built a dataset for testing [14]. We have also worked on handling 3D data; particularly noteworthy is the dataset developed for 3D compression [15]. Finally, we have developed a very efficient colour-smoothing method for 3D data [16].
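
The PointNet entries in the reference list (Garcia-Garcia et al., 2016) describe a 3D convolutional network whose keywords mention density occupancy grid representations. A minimal sketch of turning a point cloud into such a grid, assuming the grid spans the cloud's axis-aligned bounding box and each voxel stores the fraction of points inside it; the grid size and normalization actually used in that work are not given in this report, and the function name is illustrative.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def density_grid(points: List[Point], n: int = 4) -> List[List[List[float]]]:
    """Voxelize a non-empty point cloud into an n x n x n grid of point fractions.

    Assumed normalization: the grid spans the cloud's axis-aligned bounding box
    and each voxel stores (points in voxel) / (total points).
    """
    lo = [min(p[i] for p in points) for i in range(3)]
    hi = [max(p[i] for p in points) for i in range(3)]
    grid = [[[0.0] * n for _ in range(n)] for _ in range(n)]
    for p in points:
        idx = []
        for i in range(3):
            extent = hi[i] - lo[i]
            k = int((p[i] - lo[i]) / extent * n) if extent > 0 else 0
            idx.append(min(k, n - 1))      # points on the max face go in the last voxel
        grid[idx[0]][idx[1]][idx[2]] += 1.0 / len(points)
    return grid
```

The fixed-size grid is what a 3D convolutional network consumes, in the same way a 2D CNN consumes a fixed-size image.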

Progress and achievement of the objective: This objective has been 100% achieved, within the established deadlines.

Activity 4: Develop robust self-localization techniques for the robot within a known environment

Because 3D maps are complex to handle, we have explored new ways of representing them. [17] describes a new mapping method. Existing 3D registration methods have been surveyed in [18]. [19] presents a new GNG-based method that can be combined with [17]. [20] describes a new active perception method for humanoids. [21] explores the noise tolerance of GNG networks for mapping. [22] explores how to extract planes more efficiently. [23] proposes a development framework for semantic (scene) localization, and [13] explores the possibilities of this type of localization. [24] presents a dataset used in our experiments which, together with [23], is one of the most complete available. [25] enables the processing of 3D data sequences with GNG. [26] begins exploring the use of deep learning for scene recognition. [27] and [28] present a first approach to building semantic maps.
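
Growing Neural Gas (GNG) recurs throughout this activity ([19], [21], [25]). For readers unfamiliar with it, here is a minimal pure-Python sketch of the standard algorithm as published by Fritzke (1995), not the group's 3D or GPU variants. The parameter defaults are illustrative values, and the class and attribute names are hypothetical.

```python
import math
import random
from typing import Dict, FrozenSet, List, Sequence


class GNG:
    """Minimal Growing Neural Gas (after Fritzke, 1995); illustrative parameters."""

    def __init__(self, dim: int, eps_b: float = 0.05, eps_n: float = 0.006,
                 max_age: int = 50, lam: int = 100, alpha: float = 0.5,
                 decay: float = 0.995, max_nodes: int = 100, seed: int = 0):
        rng = random.Random(seed)
        # Start with two random nodes; node ids are never reused after deletion.
        self.w: Dict[int, List[float]] = {i: [rng.random() for _ in range(dim)] for i in (0, 1)}
        self.err: Dict[int, float] = {0: 0.0, 1: 0.0}   # accumulated squared error per node
        self.age: Dict[FrozenSet[int], int] = {}         # undirected edge -> age
        self.next_id, self.steps = 2, 0
        self.eps_b, self.eps_n, self.max_age = eps_b, eps_n, max_age
        self.lam, self.alpha, self.decay, self.max_nodes = lam, alpha, decay, max_nodes

    def neighbours(self, i: int) -> List[int]:
        return [j for e in self.age if i in e for j in e if j != i]

    def fit_one(self, x: Sequence[float]) -> None:
        # 1. Winner s1 and runner-up s2.
        s1, s2 = sorted(self.w, key=lambda i: math.dist(x, self.w[i]))[:2]
        # 2. Age the edges of s1 and accumulate its squared error.
        for e in self.age:
            if s1 in e:
                self.age[e] += 1
        self.err[s1] += math.dist(x, self.w[s1]) ** 2
        # 3. Move s1 (rate eps_b) and its neighbours (rate eps_n) towards x.
        for j, eps in [(s1, self.eps_b)] + [(n, self.eps_n) for n in self.neighbours(s1)]:
            self.w[j] = [wk + eps * (xk - wk) for wk, xk in zip(self.w[j], x)]
        # 4. Refresh edge s1-s2, prune old edges, then drop nodes left without edges.
        self.age[frozenset((s1, s2))] = 0
        self.age = {e: a for e, a in self.age.items() if a <= self.max_age}
        linked = {i for e in self.age for i in e}
        for i in [i for i in self.w if i not in linked]:
            del self.w[i], self.err[i]
        # 5. Every lam steps, insert a node halfway between the worst node q
        #    and its worst neighbour f.
        self.steps += 1
        if self.steps % self.lam == 0 and len(self.w) < self.max_nodes:
            q = max(self.err, key=lambda i: self.err[i])
            f = max(self.neighbours(q), key=lambda i: self.err[i])
            r, self.next_id = self.next_id, self.next_id + 1
            self.w[r] = [(a + b) / 2 for a, b in zip(self.w[q], self.w[f])]
            del self.age[frozenset((q, f))]
            self.age[frozenset((q, r))] = 0
            self.age[frozenset((r, f))] = 0
            self.err[q] *= self.alpha
            self.err[f] *= self.alpha
            self.err[r] = self.err[q]
        # 6. Decay all accumulated errors.
        for i in self.err:
            self.err[i] *= self.decay
```

Each call to `fit_one` processes one sample; after training, `w` holds the node positions and `age` the learned edges. Representing a dense point cloud by a few nodes plus their topology is what makes GNG attractive for compact 3D map representations.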

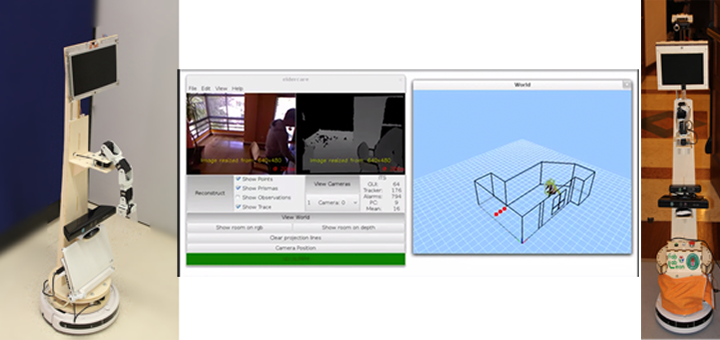

Progress and achievement of the objective: This objective has been 100% achieved, within the established deadlines.

Activity 5: Develop a cognitive system that enables intelligent behaviour in a manipulator robot, integrating the techniques developed in the previous objectives.

Regarding GPU acceleration, [29] enables real-time motion estimation on these devices. [21] reconstructs a 3D environment on the GPU. [30] explores GPU implementations of 3D registration algorithms. [31] presents a GPU-based object recognition system designed to run on a Jetson so that it can be deployed on a mobile robot.
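
[18] surveys 3D registration methods and [30] moves registration to the GPU. One standard method, iterative closest point (ICP), is also one of the two registration approaches tested in the Viejo et al. abstract in the reference list. The sketch below is a simplified 2D, point-to-point version for readability (in 3D the rotation is usually obtained from an SVD rather than a single angle). The brute-force nearest-neighbour search is the costly, highly parallel step that makes GPU acceleration attractive. Function names and parameters are illustrative.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def centroid(pts: List[Point]) -> Point:
    n = len(pts)
    return sum(p[0] for p in pts) / n, sum(p[1] for p in pts) / n


def best_rigid_2d(src: List[Point], dst: List[Point]) -> Tuple[float, float, float]:
    """Closed-form least-squares rotation angle and translation mapping src[i] onto dst[i]."""
    (sx, sy), (dx, dy) = centroid(src), centroid(dst)
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy   # centre both sets
        dot += px * qx + py * qy
        cross += px * qy - py * qx
    theta = math.atan2(cross, dot)            # angle maximizing sum of q . (R p)
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)   # t = mean(dst) - R mean(src)


def icp_2d(src: List[Point], dst: List[Point], iterations: int = 20) -> List[Point]:
    """Simplified 2D point-to-point ICP: nearest-neighbour matching + closed-form alignment."""
    cur = list(src)
    for _ in range(iterations):
        # brute-force correspondences: the data-parallel step a GPU would accelerate
        matches = [min(dst, key=lambda q: math.dist(p, q)) for p in cur]
        theta, tx, ty = best_rigid_2d(cur, matches)
        c, s = math.cos(theta), math.sin(theta)
        cur = [(c * x - s * y + tx, s * x + c * y + ty) for x, y in cur]
    return cur
```

ICP only converges to the right alignment when the initial guess is close enough for most nearest-neighbour matches to be correct, which is why it is often seeded with a coarse feature-based estimate.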

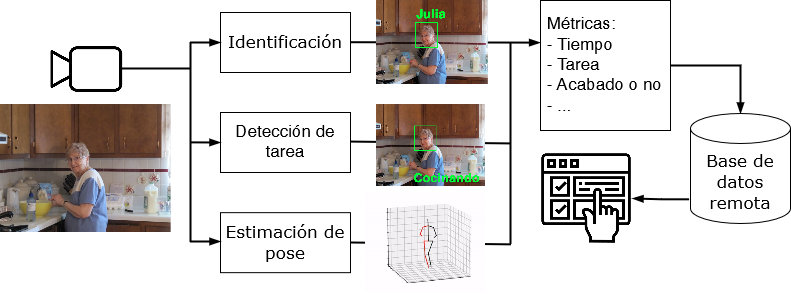

[32, 33, 34, 35, 36] present the different variants of the robotic platform that will be used as a prototype. We are developing a robot-independent system (which can nonetheless be integrated into the robot) that supports interaction and rehabilitation with patients with acquired brain injury. We will carry out the pilot with real patients using this system. No publications have been produced because a patent is pending. Video: http://www.rovit.ua.es/sirmaved_multiSensorApp.mp4

Progress and achievement of the objective: This objective has been 100% achieved, within the established deadlines.

Activity 6: Design a care and rehabilitation scenario, identify metrics, and carry out the pilot and final evaluation of the developed system in real scenarios with real patients.

The care and rehabilitation scenario has been designed, together with the metrics for evaluating the patient's social interaction, attention and rehabilitation.

Progress and achievement of the objective: This objective has been 100% achieved, within the established deadlines.

Activity 7: Coordinate the proposed objectives and disseminate the project results

All coordination activities have been carried out (regular meetings, either in person or by videoconference). The project website is available at http://www.rovit.ua.es/?p=176

We use a Google Drive folder for internal project management. It contains the minutes of the meetings held, as well as the videos and documentation associated with the milestones.

A conference paper [37] has been published presenting the project's results and structure.

The project was also publicized through a press release: http://web.ua.es/es/actualidad-universitaria/2015/abril2015/abril2015-1-5/tecnologia-y-robotica-aplicada-al-bienestar-de-personas-con-dano-cerebral-adquirido-y-dependientes.html

As a result of the project, we have also started collaborating with the Fundación Casaverde, which specializes in treating this type of patient. An agreement has been signed between the foundation and the University of Alicante: http://web.ua.es/es/actualidad-universitaria/2016/julio16/1-10/la-universidad-de-alicante-firma-un-convenio-para-la-rehabilitacion-de-personas-con-dano-cerebral-con-la-fundacion-casaverde.html

Press coverage related to the interaction and communication system:

Progress and achievement of the objective: This objective has been 100% achieved, within the established deadlines.

Team

- Miguel Cazorla (Principal Investigator)

- José García Rodríguez (Principal Investigator)

- Domingo Gallardo

- María Isabel Alfonso

- Diego Viejo

- Antonio Botía

- Vicente Matellán (Universidad de León)

- José María Cañas (Universidad Rey Juan Carlos)

- Francisco Martín (Universidad Rey Juan Carlos)

- Cristina Suárez (Hospital Virgen del Rocío de Sevilla)

Project results

Advances achieved in the project

The main advances achieved in this project aim, on the one hand, to improve communication between a dependent patient and their doctor or therapist and, on the other, to provide the patient with external assistance.

One advance is an ambient intelligence system for monitoring people in an environment (a home or a residence). The system is robust to lighting conditions (it can work in the dark), so it can operate 24/7. It has been installed in a patient's home and in several residences (for patients with cognitive disabilities), where it has been able to detect critical situations.

Another advance concerns communication between patients and doctors or therapists. Patients with acquired brain injury usually have a disability that depends on the damage they suffered, so no two patients share the same disability. We have therefore developed a communication system that adapts to this diversity. Using different types of sensors (3D cameras, hand detection, eye tracking), the system learns the gestures each user is able to perform, which allows the doctor or therapist to deliver therapy to a larger number of patients. This system has been integrated into a platform for developing rehabilitation applications driven by the gestures the patient performs. The system is patented and has been successfully tested at the Hospital Virgen del Rocío in Seville.

Finally, advances have been made in several areas closer to basic research, such as object recognition, gesture recognition, semantic robot localization and robot motion planning. These advances have been necessary

News item on the University of Alicante portal

Interaction and rehabilitation system

Defended theses

- Vicente Morell Giménez: Contributions to 3D data processing. Supervisors: José García-Rodríguez and Miguel Cazorla. Date: October 2014. European mention.

- Javier Montoyo Bojo: Estudio y mejora de métodos de registro 3D: aceleración sobre unidades de procesamiento gráfico y caracterización del espacio de transformaciones iniciales. Supervisors: Miguel Cazorla and José García-Rodríguez. Date: November 2015.

- Javier Navarrete: Contribuciones al suavizado de color, compresión y registro de nubes de puntos 3D. Supervisors: Miguel Cazorla and Diego Viejo. Date: May 2016.

Conferences and journals


@article{jsan3020095,

abstract = {In this work, we present a multi-camera surveillance system based on the use of self-organizing neural networks to represent events on video. The system processes several tasks in parallel using GPUs (graphic processor units). It addresses multiple vision tasks at various levels, such as segmentation, representation or characterization, analysis and monitoring of the movement. These features allow the construction of a robust representation of the environment and interpret the behavior of mobile agents in the scene. It is also necessary to integrate the vision module into a global system that operates in a complex environment by receiving images from multiple acquisition devices at video frequency. Offering relevant information to higher level systems, monitoring and making decisions in real time, it must accomplish a set of requirements, such as: time constraints, high availability, robustness, high processing speed and re-configurability. We have built a system able to represent and analyze the motion in video acquired by a multi-camera network and to process multi-source data in parallel on a multi-GPU architecture.},

author = {Orts-Escolano, Sergio and Garcia-Rodriguez, Jose and Morell, Vicente and Cazorla, Miguel and Azorin, Jorge and Garcia-Chamizo, Juan Manuel},

doi = {10.3390/jsan3020095},

issn = {2224-2708},

journal = {Journal of Sensor and Actuator Networks},

keywords = {growing neural gas; camera networks; visual survei},

number = {2},

pages = {95--112},

title = {{Parallel Computational Intelligence-Based Multi-Camera Surveillance System}},

url = {http://www.mdpi.com/2224-2708/3/2/95},

volume = {3},

year = {2014}

}

@article{Azorin2016b,

abstract = {Human behaviour recognition has been, and still remains, a challenging problem that involves different areas of computational intelligence. The automated understanding of people activities from video sequences is an open research topic in which the computer vision and pattern recognition areas have made big efforts. In this paper, the problem is studied from a prediction point of view. We propose a novel method able to early detect behaviour using a small portion of the input, in addition to the capabilities of it to predict behaviour from new inputs. Specifically, we propose a predictive method based on a simple representation of trajectories of a person in the scene which allows a high level understanding of the global human behaviour. The representation of the trajectory is used as a descriptor of the activity of the individual. The descriptors are used as a cue of a classification stage for pattern recognition purposes. Classifiers are trained using the trajectory representation of the complete sequence. However, partial sequences are processed to evaluate the early prediction capabilities having a specific observation time of the scene. The experiments have been carried out using the three different dataset of the CAVIAR database taken into account the behaviour of an individual. Additionally, different classic classifiers have been used for experimentation in order to evaluate the robustness of the proposal. Results confirm the high accuracy of the proposal on the early recognition of people behaviours.},

author = {Azorin-Lopez, Jorge and Saval-Calvo, Marcelo and Fuster-Guillo, Andres and Garcia-Rodriguez, Jose},

journal = {Neural Processing Letters},

number = {2},

pages = {363--387},

title = {{A novel prediction method for early recognition of global human behaviour in image sequences}},

volume = {43},

year = {2015}

}

@inproceedings{Gomez2015,

abstract = {In this paper we present a new interaction system for Schaeffer's gesture language recognition. It uses the information provided by an RGBD camera to capture body motion and recognize gestures. Schaeffer's gestures are a reduced set of gestures designed for people with cognitive disabilities. The system is able to send alarms to an assistant or even a robot for human robot interaction.},

author = {Francisco Gomez-Donoso and Miguel Cazorla},

booktitle= {Actas de la Conferencia de la Asociacion Espanola para la Inteligencia Artificial (CAEPIA)},

keywords = {3D gesture recognition, Schaeffer's gestures, human robot interaction},

title = {{Recognizing Schaeffer's Gestures for Robot Interaction}},

url = {http://simd.albacete.org/actascaepia15/papers/01045.pdf},

year = {2015}

}

@article{Gomez-Donoso2016,

abstract = {Schaeffer's sign language consists of a reduced set of gestures designed to help children with autism or cognitive learning disabilities to develop adequate communication skills. Our automatic recognition system for Schaeffer's gesture language uses the information provided by an RGB-D camera to capture body motion and recognize gestures using Dynamic Time Warping combined with k-Nearest Neighbors methods. The learning process is reinforced by the interaction with the proposed system that accelerates learning itself thus helping both children and educators. To demonstrate the validity of the system, a set of qualitative experiments with children were carried out. As a result, a system which is able to recognize a subset of 11 gestures of Schaeffer's sign language online was achieved.},

author = {Gomez-Donoso, Francisco and Cazorla, Miguel and Garcia-Garcia, Alberto and Garcia Rodriguez, Jose},

journal = {Expert Systems},

title = {Automatic Schaeffer's Gestures Recognition System},

volume={33},

number={5},

pages={480--488},

year = {2016}

}

@Article{Morell2014,

author = {Morell, Vicente and Orts-Escolano, Sergio and Cazorla, Miguel and Garcia-Rodriguez, Jose},

title = {{Geometric 3D point cloud compression}},

journal = {Pattern Recognition Letters},

year = {2014},

volume = {50},

pages = {55--62},

abstract = { The use of 3D data in mobile robotics applications provides valuable information about the robot's environment but usually the huge amount of 3D information is unmanageable by the robot storage and computing capabilities. A data compression is necessary to store and manage this information but preserving as much information as possible. In this paper, we propose a 3D lossy compression system based on plane extraction which represent the points of each scene plane as a Delaunay triangulation and a set of points/area information. The compression system can be customized to achieve different data compression or accuracy ratios. It also supports a color segmentation stage to preserve original scene color information and provides a realistic scene reconstruction. The design of the method provides a fast scene reconstruction useful for further visualization or processing tasks. },

doi = {10.1016/j.patrec.2014.05.016},

issn = {0167-8655},

keywords = {3D data; Compression; Kinect}

}

@inproceedings{Orts20143d,

author = {Orts-Escolano, Sergio and Garcia-Rodriguez, Jose and Morell, Vicente and Cazorla, Miguel and Garcia-Chamizo, Juan Manuel},

booktitle = {Neural Networks (IJCNN), The 2014 International Joint Conference on},

title = {{3D Colour Object Reconstruction based on Growing Neural Gas}},

year = {2014}

}

@inproceedings{rangel2015object,

title={Object Recognition in Noisy RGB-D Data},

author={Rangel, Jos{\'e} Carlos and Morell, Vicente and Cazorla, Miguel and Orts-Escolano, Sergio and Garc{\'\i}a-Rodr{\'\i}guez, Jos{\'e}},

booktitle={International Work-Conference on the Interplay Between Natural and Artificial Computation},

pages={261--270},

year={2015},

organization={Springer International Publishing}

}

@article{Rangel2016,

abstract = {Finding an appropriate image representation is a crucial problem in robotics. This problem has been classically addressed by means of computer vision techniques, where local and global features are used. The selection or/and combination of different features is carried out by taking into account repeatability and distinctiveness, but also the specific problem to solve. In this article, we propose the generation of image descriptors from general purpose semantic annotations. This approach has been evaluated as source of information for a scene classifier, and specifically using Clarifai as the semantic annotation tool. The experimentation has been carried out using the ViDRILO toolbox as benchmark, which includes a comparison of state-of-the-art global features and tools to make comparisons among them. According to the experimental results, the proposed descriptor performs similarly to well-known domain-specific image descriptors based on global features in a scene classification task. Moreover, the proposed descriptor is based on generalist annotations without any type of problem-oriented parameter tuning.},

author = {Jose Carlos Rangel and Miguel Cazorla and Ismael Garcia-Varea and Jesus Martinez-Gomez and Elisa Fromont and Marc Sebban},

doi = {10.1080/01691864.2016.1164621},

journal = {Advanced Robotics},

number = {11--12},

pages = {758--769},

title = {{Scene Classification from Semantic Labeling}},

volume = {30},

year = {2016}

}

@inproceedings{MartinezRobot2015,

author = {Martinez-Gomez, Jesus and Cazorla, Miguel and Garcia-Varea, Ismael and Romero-Gonzalez, Cristina},

booktitle = {2nd Iberian robotics conference},

title = {{Object categorization from RGB-D local features and Bag Of Words}},

year = {2015}

}

@PHDTHESIS{MorellThesis,

author = {Morell, Vicente},

supervisor={Miguel Cazorla and Jose Garcia-Rodriguez},

title = {Contributions to 3D data processing},

year = {2014}

}

@inproceedings{orts2015optimized,

title={Optimized Representation of 3D Sequences Using Neural Networks},

author={Orts-Escolano, Sergio and Garcia-Rodriguez, Jose and Morell, Vicente and Cazorla, Miguel and Garcia-Garcia, Alberto and Ovidiu-Oprea, Sergiu},

booktitle={International Work-Conference on the Interplay Between Natural and Artificial Computation},

pages={251--260},

year={2015},

organization={Springer International Publishing}

}

@inproceedings{Garcia2016a,

author = {Garcia-Garcia, Albert and Gomez-Donoso, Francisco and Garcia-Rodriguez, Jose and Orts-Escolano, Sergio and Cazorla, Miguel and Azorin-Lopez, Jorge},

booktitle = {The IEEE World Congress on Computational Intelligence},

title = {{PointNet: A 3D Convolutional Neural Network for Real-Time Object Class Recognition}},

year = {2016}

}

@INPROCEEDINGS{Garcia2016,

author={A. Garcia-Garcia and F. Gomez-Donoso and J. Garcia-Rodriguez and S. Orts-Escolano and M. Cazorla and J. Azorin-Lopez},

booktitle={2016 International Joint Conference on Neural Networks (IJCNN)},

title={PointNet: A 3D Convolutional Neural Network for real-time object class recognition},

year={2016},

pages={1578-1584},

keywords={CAD;computer vision;data structures;learning (artificial intelligence);neural net architecture;object recognition;3D ShapeNets;3D convolutional neural network;ModelNet;PointNet;VoxNet;computer vision;deep learning techniques;density occupancy grids representations;large-scale 3D CAD model dataset;real-time object class recognition;supervised convolutional neural network architecture;Computer architecture;Machine learning;Neural networks;Object recognition;Solid modeling;Three-dimensional displays;Two dimensional displays},

month={July},}

@article{Navarrete2016Ras,

abstract = {The use of 3D data in mobile robotics applications provides valuable information about the robot's environment. However usually the huge amount of 3D information is difficult to manage due to the fact that the robot storage system and computing capabilities are insufficient. Therefore, a data compression method is necessary to store and process this information while preserving as much information as possible. A few methods have been proposed to compress 3D information. Nevertheless, there does not exist a consistent public benchmark for comparing the results (compression level, distance reconstructed error, etc.) obtained with different methods. In this paper, we propose a dataset composed of a set of 3D point clouds with different structure and texture variability to evaluate the results obtained from 3D data compression methods. We also provide useful tools for comparing compression methods, using as a baseline the results obtained by existing relevant compression methods.},

author = {Javier Navarrete and Vicente Morell and Miguel Cazorla and Diego Viejo and Jose Garcia-Rodriguez and Sergio Orts-Escolano},

journal = {Robotics and Autonomous Systems},

pages = {550--557},

title = {{3DCOMET: 3D Compression Methods Test Dataset}},

volume = {75, Part B},

year = {2016}

}

@article{navarrete2016color,

title={Color smoothing for RGB-D data using entropy information},

author={Navarrete, Javier and Viejo, Diego and Cazorla, Miguel},

journal={Applied Soft Computing},

volume={46},

pages={361--380},

year={2016},

publisher={Elsevier}

}

@InProceedings{Morell20143d,

author = {Morell, Vicente and Cazorla, Miguel and Orts-Escolano, sergio and Garcia-Rodriguez, Jose},

title = {{3D Maps Representation using GNG}},

booktitle = {Neural Networks (IJCNN), The 2014 International Joint Conference on},

year = {2014}

}

@article{morell2014comparative,

abstract = {The use of RGB-D sensors for mapping and recognition tasks in robotics or, in general, for virtual reconstruction has increased in recent years. The key aspect of these kinds of sensors is that they provide both depth and color information using the same device. In this paper, we present a comparative analysis of the most important methods used in the literature for the registration of subsequent RGB-D video frames in static scenarios. The analysis begins by explaining the characteristics of the registration problem, dividing it into two representative applications: scene modeling and object reconstruction. Then, a detailed experimentation is carried out to determine the behavior of the different methods depending on the application. For both applications, we used standard datasets and a new one built for object reconstruction.},

author = {Morell-Gimenez, Vicente and Saval-Calvo, Marcelo and Azorin-Lopez, Jorge and Garcia-Rodriguez, Jose and Cazorla, Miguel and Orts-Escolano, Sergio and Fuster-Guillo, Andres},

doi = {10.3390/s140508547},

issn = {1424-8220},

journal = {Sensors},

keywords = {RGB-D sensor; registration; robotics mapping; obje},

month = {may},

number = {5},

pages = {8547--8576},

publisher = {Multidisciplinary Digital Publishing Institute},

title = {{A Comparative study of Registration Methods for RGB-D Video of static scenes}},

url = {http://www.mdpi.com/1424-8220/14/5/8547},

volume = {14},

year = {2014}

}

@article{viejo2014combining,

abstract = {The use of 3D data in mobile robotics provides valuable information about the robot's environment. Traditionally, stereo cameras have been used as a low-cost 3D sensor. However, the lack of precision and texture for some surfaces suggests that the use of other 3D sensors could be more suitable. In this work, we examine the use of two sensors: an infrared SR4000 and a Kinect camera. We use a combination of 3D data obtained by these cameras, along with features obtained from 2D images acquired from these cameras, using a Growing Neural Gas (GNG) network applied to the 3D data. The goal is to obtain a robust egomotion technique. The GNG network is used to reduce the camera error. To calculate the egomotion, we test two methods for 3D registration. One is based on an iterative closest points algorithm, and the other employs random sample consensus. Finally, a simultaneous localization and mapping method is applied to the complete sequence to reduce the global error. The error from each sensor and the mapping results from the proposed method are examined.},

author = {Viejo, Diego and Garcia-Rodriguez, Jose and Cazorla, Miguel},

journal = {Information Sciences},

keywords = {GNG; SLAM; 3D registration},

pages = {174--185},

title = {{Combining Visual Features and Growing Neural Gas Networks for Robotic 3D SLAM}},

volume = {276},

year = {2014}

}

@article{Martin2015,

abstract = {Robots detect and keep track of relevant objects in their environment to accomplish some tasks. Many of them are equipped with mobile cameras as the main sensors, process the images and maintain an internal representation of the detected objects. We propose a novel active visual memory that moves the camera to detect objects in robot's surroundings and tracks their positions. This visual memory is based on a combination of multi-modal filters that efficiently integrates partial information. The visual attention subsystem is distributed among the software components in charge of detecting relevant objects. We demonstrate the efficiency and robustness of this perception system in a real humanoid robot participating in the RoboCup SPL competition.},

author = {Martin, Francisco and Ag{\"{u}}ero, Carlos and Canas, Jose Maria},

doi = {10.1142/S0219843615500097},

issn = {0219-8436},

journal = {International Journal of Humanoid Robotics},

keywords = {Active vision,Humanoid robots,Robot soccer},

number = {1},

pages = {1--22},

title = {{Active Visual Perception for Humanoid Robots}},

url = {http://www.worldscientific.com/doi/abs/10.1142/s0219843615500097},

volume = {12},

year = {2015}

}

@article{Orts2015,

abstract = {With the advent of low-cost 3D sensors and 3D printers, scene and object 3D surface reconstruction has become an important research topic in the last years. In this work, we propose an automatic (unsupervised) method for 3D surface reconstruction from raw unorganized point clouds acquired using low-cost 3D sensors. We have modified the Growing Neural Gas (GNG) network, which is a suitable model because of its flexibility, rapid adaptation and excellent quality of representation, to perform 3D surface reconstruction of different real-world objects and scenes. Some improvements have been made on the original algorithm considering colour and surface normal information of input data during the learning stage and creating complete triangular meshes instead of basic wire-frame representations. The proposed method is able to successfully create 3D faces online, whereas existing 3D reconstruction methods based on Self-Organizing Maps (SOMs) required post-processing steps to close gaps and holes produced during the 3D reconstruction process. A set of quantitative and qualitative experiments were carried out to validate the proposed method. The method has been implemented and tested on real data, and has been found to be effective at reconstructing noisy point clouds obtained using low-cost 3D sensors.},

author = {Orts-Escolano, S and Garcia-Rodriguez, J and Morell, V and Cazorla, Miguel and Serra-Perez, J A and Garcia-Garcia, A},

issn = {1370-4621},

journal = {Neural Processing Letters},

number = {2},

pages = {401--423},

title = {{3D surface reconstruction of noisy point clouds using Growing Neural Gas}},

volume = {43},

year = {2016}

}

@article{Azorin2015,

abstract = {Plane model extraction from three-dimensional point clouds is a necessary step in many different applications such as planar object reconstruction, indoor mapping and indoor localization. Different RANdom SAmple Consensus (RANSAC)-based methods have been proposed for this purpose in recent years. In this study, we propose a novel method based on RANSAC called Multiplane Model Estimation, which can estimate multiple plane models simultaneously from a noisy point cloud using the knowledge extracted from a scene (or an object) in order to reconstruct it accurately. This method comprises two steps: first, it clusters the data into planar faces that preserve some constraints defined by knowledge related to the object (e.g., the angles between faces); and second, the models of the planes are estimated based on these data using a novel multi-constraint RANSAC. We performed experiments in the clustering and RANSAC stages, which showed that the proposed method performed better than state-of-the-art methods.},

author = {Marcelo Saval-Calvo and Jorge Azorin-Lopez and Andres Fuster-Guillo and Jose Garcia-Rodriguez},

journal = {Applied Soft Computing},

pages = {572--686},

title = {{Three-dimensional planar model estimation using multi-constraint knowledge based on k-means and RANSAC}},

volume = {34},

year = {2015}

}

@article{Martinez2016ras,

abstract = {The semantic localization problem in robotics consists in determining the place where a robot is located by means of semantic categories. The problem is usually addressed as a supervised classification process, where input data correspond to robot perceptions while classes to semantic categories, like kitchen or corridor. In this paper we propose a framework, implemented in the PCL library, which provides a set of valuable tools to easily develop and evaluate semantic localization systems. The implementation includes the generation of 3D global descriptors following a Bag-of-Words approach. This allows the generation of fixed-dimensionality descriptors from any type of keypoint detector and feature extractor combinations. The framework has been designed, structured and implemented to be easily extended with different keypoint detectors, feature extractors as well as classification models. The proposed framework has also been used to evaluate the performance of a set of already implemented descriptors, when used as input for a specific semantic localization system. The obtained results are discussed paying special attention to the internal parameters of the BoW descriptor generation process. Moreover, we also review the combination of some keypoint detectors with different 3D descriptor generation techniques.},

author = {Martinez-Gomez, Jesus and Morell Gimenez, Vicente and Cazorla, Miguel and Garcia-Varea, Ismael},

journal = {Robotics and Autonomous Systems},

pages = {641--648},

title = {{Semantic Localization in the PCL library}},

volume = {75, Part B},

year = {2016}

}

@article{Morell2015,

author = {Vicente Morell and Jesus Martinez-Gomez and Miguel Cazorla and Ismael Garcia-Varea},

journal = {International Journal of Robotics Research},

number = {14},

pages = {1681--1687},

title = {{ViDRILO: The Visual and Depth Robot Indoor Localization with Objects information dataset}},

volume = {34},

year = {2015}

}

@inproceedings{Orts2015IJCNN,

author = {Orts-Escolano, S and Garcia-Rodriguez, J and Morell, V and Cazorla, M and Saval-Calvo, M and Azorin, J},

booktitle = {Neural Networks (IJCNN), The 2015 International Joint Conference on},

title = {{Processing Point Cloud sequences with Growing Neural Gas}},

year = {2015}

}

@inproceedings{RangelRobot2015,

author = {Rangel, Jose Carlos and Cazorla, Miguel and Varea, Ismael Garcia and Martinez-Gomez, Jesus and Fromont, Elisa and Sebban, Marc},

booktitle = {2nd Iberian Robotics Conference},

title = {{Computing Image Descriptors from Annotations Acquired from External Tools}},

year = {2015}

}

@inproceedings{Rodriguez2016,

author = {Angel Rodriguez and Francisco Gomez-Donoso and Jesus Martinez-Gomez and Miguel Cazorla},

title = {Building 3D maps with tag information},

booktitle = {XVII Workshop en Agentes Físicos (WAF 2016)},

year = {2016}

}

@article{Rangel2016b,

author = {Jos\'{e} Carlos Rangel and Jesus Mart\'{i}nez-Gomez and Ismael Garc\'{i}a-Varea and Miguel Cazorla},

title = {LexToMap: lexical-based topological mapping},

journal = {Advanced Robotics},

volume = {31},

number = {5},

pages = {268--281},

year = {2017},

doi = {10.1080/01691864.2016.1261045},

URL = {http://dx.doi.org/10.1080/01691864.2016.1261045},

eprint = {http://dx.doi.org/10.1080/01691864.2016.1261045},

abstract = { Any robot should be provided with a proper representation of its environment in order to perform navigation and other tasks. In addition to metrical approaches, topological mapping generates graph representations in which nodes and edges correspond to locations and transitions. In this article, we present LexToMap, a topological mapping procedure that relies on image annotations. These annotations, represented in this work by lexical labels, are obtained from pre-trained deep learning models, namely CNNs, and are used to estimate image similarities. Moreover, the lexical labels contribute to the descriptive capabilities of the topological maps. The proposal has been evaluated using the KTH-IDOL 2 data-set, which consists of image sequences acquired within an indoor environment under three different lighting conditions. The generality of the procedure as well as the descriptive capabilities of the generated maps validate the proposal. }

}

@article{garcia2014z,

author = {J. Garcia-Rodriguez and S. Orts-Escolano and N. Angelopoulou and A. Psarrou and J. Azorin-Lopez},

journal = {Journal of Real-Time Image Processing},

title = {{Real time motion estimation using a neural architecture implemented on GPUs}},

year = {2014}

}

@inproceedings{Montoyo20143Registration,

author = {Montoyo, Javier and Morell, Vicente and Cazorla, Miguel and Garcia-Rodriguez, Jose and Orts-Escolano, sergio},

booktitle = {International Symposium on Robotics, ISR},

title = {{Registration methods for RGB-D cameras accelerated on GPUs}},

year = {2014}

}

@article{Garcia2016RTIP,

title = {Interactive 3D object recognition pipeline on mobile GPGPU computing platforms using low-cost RGB-D sensors},

journal = {Journal of Real-Time Image Processing},

volume = {14},

number = {3},

pages = {585--604},

year = {2018},

doi = {10.1007/s11554-016-0607-x},

author = {Albert Garcia-Garcia and Sergio Orts-Escolano and Jose Garcia-Rodriguez and Miguel Cazorla}

}

@inproceedings{RodriguezLera2014,

author = {Lera, Francisco Rodriguez and Garcia, Fernando Casado and Esteban, Gonzalo and Matellan, Vicente},

booktitle = {Second International Conference on Artificial Intelligence, Modelling and Simulation},

title = {{Mobile robot performance in robotics challenges: Analyzing a simulated indoor scenario and its translation to real-world}}

}

@inproceedings{MartinRico2014,

author = {Rico, Francisco Martin and Lera, Francisco J Rodriguez and Matellan, Vicente},

booktitle = {IEEE International Conference on Autonomous Robot Systems and Competitions (IEEE ICARSC) 2014},

title = {{MYRABot+: A Feasible robotic system for interaction challenges}}

}

@inproceedings{RodriguezLera2014b,

author = {Lera, Francisco J Rodriguez and Casado, Fernando and Hernandez, Carlos Rodriguez and Matellan, Vicente},

booktitle = {XV Workshop en Agentes Fisicos (WAF 2014)},

title = {{Low-Cost Mobile Manipulation Platform for RoCKIn@home Competition}}

}

@inproceedings{MartinRico2014b,

author = {Rico, Francisco Martin and Mateos, Jose and Lera, Francisco J and Bustos, Pablo and Matellan, Vicente},

booktitle = {XV Workshop en Agentes Fisicos (WAF 2014)},

title = {{A robotic platform for domestic applications}}

}

@article{juanfelipe2015,

author = {Juan Felipe Garcia Sierra and Francisco Javier Rodriguez Lera and Camino Fernandez and Vicente Matellan},

journal = {IEEE Revista Iberoamericana de Tecnologias del Aprendizaje},

number = {1},

pages = {19--25},

title = {{Using Robots and Animals as Motivational Tools in ICT Courses}},

volume = {10},

year = {2015}

}

@inproceedings{Cazorla2015Caepia1,

author = {Cazorla, Miguel and Garcia-Rodriguez, Jose and Plaza, Jose Maria Canas and Varea, Ismael Garcia and Matellan, Vicente and Rico, Francisco Martin and Martinez-Gomez, Jesus and Lera, Francisco Javier Rodriguez and Mejias, Cristina Suarez and Sahuquillo, Maria Encarnacion Martinez},

booktitle = {XVI Conferencia de la Asociacion Espanola para la Inteligencia Artificial (CAEPIA)},

title = {{SIRMAVED: Development of a comprehensive robotic system for monitoring and interaction for people with acquired brain damage and dependent people}},

year = {2015}

}