Communication aids for people with speech or hearing impairments

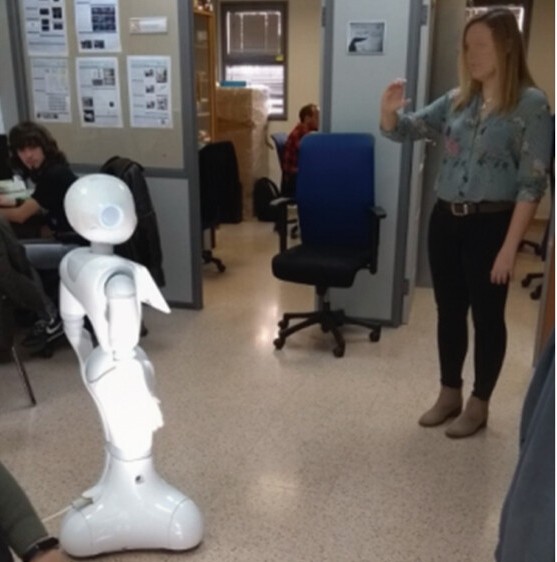

This use case applies AI-based simulation technologies to the design and optimization of assistive systems for people with disabilities, focusing on how users with different sensory, cognitive, or motor limitations interact with intelligent devices and environments.

By simulating virtual users with diverse accessibility needs, the system allows researchers and developers to test, evaluate, and refine assistive technologies (such as adaptive communication interfaces, intelligent prosthetics, voice-controlled systems, or gaze-based interaction tools) before deploying them in real settings. These simulations integrate AI models that reproduce realistic user behaviors, reaction times, and emotional or physical constraints. This helps predict how different users will experience a given design and where they are likely to struggle with it.
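The simulation idea can be illustrated with a deliberately small sketch. It is not the method used in this use case, which the text does not specify. The sketch assumes that each virtual user profile is described only by a log-normal reaction-time distribution, a common simplifying assumption. It also assumes the interface under test has a single response timeout. The profile names, their parameters, the 2.0 s default timeout, and all function names (`VirtualUserProfile`, `sample_reaction_times`, `success_rate`, `personalized_timeout`) are hypothetical illustrations, not measured data. The sketch estimates how often each simulated user group would respond before the timeout. It then derives a per-profile timeout that meets a target success rate, which is one simple way a design could adapt to individual abilities.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class VirtualUserProfile:
    """Hypothetical virtual-user profile; reaction time is ASSUMED to be log-normal."""
    name: str
    median_rt_s: float  # median reaction time in seconds (illustrative value, not data)
    spread: float       # sigma of the underlying normal distribution (illustrative)


def sample_reaction_times(profile, n, rng):
    """Draw n simulated reaction times (seconds) for one virtual user profile."""
    mu = math.log(profile.median_rt_s)  # the log-normal median equals exp(mu)
    return [rng.lognormvariate(mu, profile.spread) for _ in range(n)]


def success_rate(reaction_times, timeout_s):
    """Fraction of simulated trials answered before the interface times out."""
    return sum(rt <= timeout_s for rt in reaction_times) / len(reaction_times)


def personalized_timeout(reaction_times, target=0.95):
    """Smallest observed timeout letting at least `target` of trials succeed."""
    ordered = sorted(reaction_times)
    index = math.ceil(target * len(ordered)) - 1  # empirical quantile index
    return ordered[max(index, 0)]


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so the simulation is reproducible
    profiles = [  # hypothetical profiles chosen only to illustrate the workflow
        VirtualUserProfile("baseline", 0.6, 0.3),
        VirtualUserProfile("motor impairment", 1.5, 0.5),
        VirtualUserProfile("high cognitive load", 1.2, 0.6),
    ]
    fixed_timeout = 2.0  # hypothetical default timeout of the interface under test
    for profile in profiles:
        rts = sample_reaction_times(profile, 5000, rng)
        print(f"{profile.name:>20}: success at {fixed_timeout:.1f}s = "
              f"{success_rate(rts, fixed_timeout):.1%}, "
              f"timeout for 95% success = {personalized_timeout(rts):.2f}s")
```

In practice the reaction-time model would be replaced by the richer behavioral models the text describes. The structure stays the same: sample behavior per virtual user, measure where the design fails, and tune the parameter for each profile.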

The goal is to create inclusive, human-centered assistive solutions that dynamically adapt to individual abilities and contexts. Through AI-driven analysis, developers can identify usability barriers, optimize accessibility features, and design personalized feedback mechanisms, ultimately enhancing autonomy, comfort, and quality of life for people with disabilities.